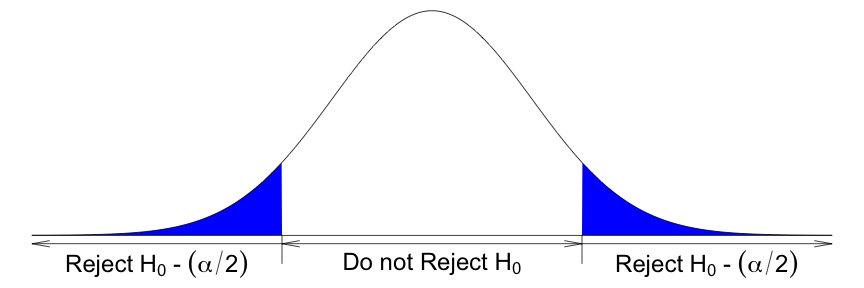

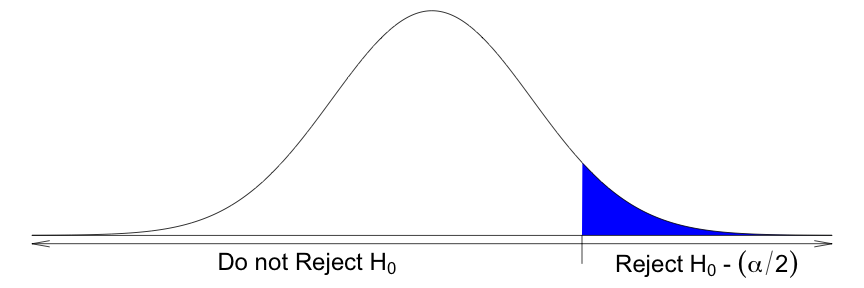

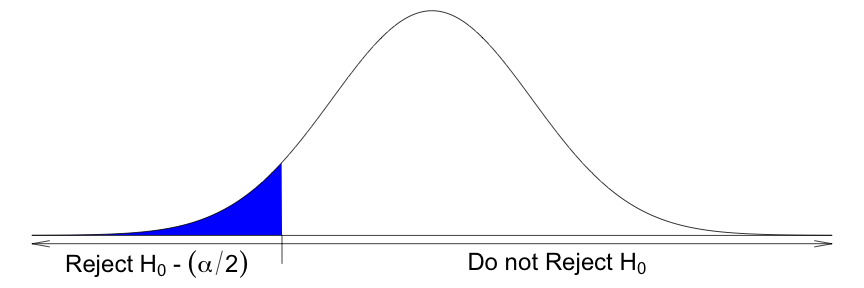

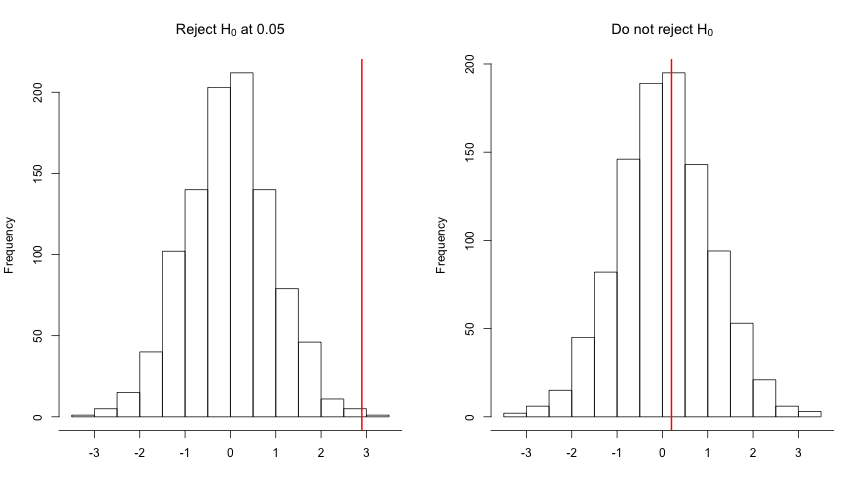

class: title-slide, middle <img src="assets/img/pr.png" width="80px" style="padding-right:10px; border-right: 2px solid #FAFAFA;"></img> <img src="assets/img/UdeS_blanc.png" width="350px" ></img> # Trend surface estimation .instructors[ GSFE01 - F. Guillaume Blanchet & Steve Vissault ] --- class: clear, middle ## <i class="fab fa-slideshare "></i> Slides available at: https://steveviss.github.io/PR-GSFE01/trend_surface/index.html ## <i class="fas fa-tag "></i> Practical: https://steveviss.github.io/PR-GSFE01/trend_surface/practice.html --- class: inverse, center, middle # Trend surface analysis <html><div style='float:left'></div><hr color='#EB811B' size=1px width=720px></html> --- # Trend surface estimation Trend surface estimation (or analysis) is a fancy wording used to describe a special type of **regression analysis** where a set of spatiotemporal explanatory variables are used to account for *all* the spatiotemporal structure. ## Properties - Straightforward to implement (uses regression functions available broadly) - Accounts for model error (usually assumed to be independent) - Can be used to calculate predition-error variance --- # Theory Let's assume that we gathered samples at specific times period for all spatial locations considered `$$\begin{bmatrix} Z(\mathbf{s}_{1_1}, t_1) & \dots & Z(\mathbf{s}_{i_1}, t_1) & \dots & Z(\mathbf{s}_{m_1}, t_1)\\ \vdots & & \vdots & & \vdots\\ Z(\mathbf{s}_{1_j}, t_j) & \dots & Z(\mathbf{s}_{i_j}, t_j) & \dots & Z(\mathbf{s}_{m_j}, t_j)\\ \vdots & & \vdots & & \vdots\\ Z(\mathbf{s}_{1_T}, t_T) & \dots & Z(\mathbf{s}_{i_T}, t_T) & \dots & Z(\mathbf{s}_{m_T}, t_T)\\ \end{bmatrix}$$` --- # Theory Based on the structure of the data previously mentionned, we can write our trend surface model .small[$$Z(\mathbf{s}_i, t_j) = \beta_0 + \beta_1X_1(\mathbf{s}_i, t_j) + \dots + \beta_kX_k(\mathbf{s}_i, t_j) + \dots + \beta_pX_p(\mathbf{s}_i, t_j) + \varepsilon(\mathbf{s}_i, t_j)$$] where - `\(\beta_0\)` is the model intercept - `\(\beta_k\)` is a regression coefficient associated to explanatory variable `\(X_k(\mathbf{s}_i, t_j)\)` - `\(X_k(\mathbf{s}_i, t_j)\)` is an explanatory variable gathered at location `\(\mathbf{s}_i\)` at time `\(t_j\)` - `\(\varepsilon(\mathbf{s}_i, t_j) \sim N(0, \sigma^2)\)` - `\(\sigma^2\)` is the variance --- # Properties of explanatory variables In a spatiotemporal context, explanatory variables can have different characteristics - Represent spatial variations but be temporally invariant (e.g. elevation) - Represent temporal variations but be spatially invariant (e.g. seasonality) - Represent spatial *and* temporal variations (e.g. humidity) - Be a purely spatial or temporal construction (e.g. xy-coordinates) - Be a purely temporal construction (e.g. day of the year) [Painting Species distribution model paper](paper/Fourcade_et_al_2018.pdf) --- # Basis functions Basis functions rely on the idea that a complex curve (or surface) can be decomposed into a *linear combination* of some .red[elemental] (or basis) functions. This results in a model that looks like `$$Z(\mathbf{s}_i, t_j) = \alpha_1\phi_1(\mathbf{s}_i, t_j) + \dots + \alpha_l\phi_l(\mathbf{s}_i, t_j) + \dots + \alpha_r\phi_r(\mathbf{s}_i, t_j) + \varepsilon(\mathbf{s}_i, t_j)$$` where - `\(\phi_l(\mathbf{s})\)` is the `\(l^\text{th}\)` spatial basis function - `\(\alpha_l\)` is an estimated parameter associated to `\(\phi_l(\mathbf{s})\)` --- # Basis functions When we use basis functions we assume that - Basis function are known (as they would for other explanatory variables) - Parameters need to be estimated (they are not known) Basis function can be **Local** : They can span only a section of the surveyed area **Gloal** : They can span the full survey area --- # Example of trend surface analysis <img src="index_files/figure-html/unnamed-chunk-1-1.png" width="864" style="display: block; margin: auto;" /> **Note**: For illustration purposes let's assume that there are no error in the model. In other words, `\(\varepsilon(\mathbf{s}) = 0\)` --- # Estimating trend surface models Because trend surface models are regressions, they are estimated using the same approaches as regression. A usual way used to estimate the parameters ( `\(\beta_0,\dots,\beta_p\)` ) is to use *ordinary least squares*, where the goal is to minimize the model's residual's sums of squares (RSS) `$$\text{RSS} = \sum_{j=1}^T\sum_{i=1}^m \left(Z(\mathbf{s}_i, t_j) - \widehat{Z}(\mathbf{s}_i, t_j)\right)^2$$` where `\(\widehat{Z}(\mathbf{s}_i, t_j)\)` is the model fitted values. --- # Estimating trend surface models ## Modelled variance Using RSS, we can also calculate an estimate of the variance parameter `$$\widehat\sigma^2_\varepsilon = \frac{\text{RSS}}{mT-p-1}.$$` Recall that - `\(m\)` is the number of spatial locations sampled - `\(T\)` is the number of temporal period sampled - `\(p\)` is the number of explanatory variables ## Feature of trend surface models - Can be used to make prediction (when the covariates are available) - Uncertainty can be obtained for these predictions - Which can be used to calculate, e.g., confidence intervals --- # Different types of models In the previous slides, we assumed that the error follows a Gaussian distribution. Using **generalized linear models**, trend surface models can be applied to many other data type as long as we are careful about the link function to use. .small[ |Data | Link name | Link function `\((\mathbf{X}\boldsymbol\beta = g(\mu))\)` | | :----: | :----: | :----: | | Gaussian | Identity | `\(\mathbf{X}\boldsymbol\beta = \mu\)` | | Poisson | Log | `\(\mathbf{X}\boldsymbol\beta = \ln(\mu)\)` | | Negative binomial | Log | `\(\mathbf{X}\boldsymbol\beta = \ln(\mu)\)` | | Presence-absence | Logit | `\(\mathbf{X}\boldsymbol\beta = \ln\left(\frac{\mu}{1-\mu}\right)\)` | | Presence-absence | Probit | `\(\mathbf{X}\boldsymbol\beta = \Phi^{-1}(\mu)\)` <br> .footnotesize[(inverse Gaussian cumulative density function)] | | Exponential | Negative inverse | `\(\mathbf{X}\boldsymbol\beta = -\mu^{-1}\)` | | Gamma | Negative inverse | `\(\mathbf{X}\boldsymbol\beta = -\mu^{-1}\)` | ] --- class: inverse, center, middle # Generalized additive models <html><div style='float:left'></div><hr color='#EB811B' size=1px width=720px></html> --- # Generalized additive model ## A bit of history Additive model were first proposed by <img src="assets/img/Friedman_Stuetzle.png" width="50%" /> In the paper published in 1981 in the Journal of the American Statistical Association <img src="assets/img/Additive_model_paper.png" width="80%" /> --- # Generalized additive model ## A bit of history .pull-left[ As for generalized additive models, it was developped by <img src="assets/img/Hastie_Tibshirani.png" width="100%" style="display: block; margin: auto;" /> ] .pull-right[ In the book published in 1990 <img src="assets/img/GAM_book_TH.jpeg" width="60%" style="display: block; margin: auto;" /> ] --- # Generalized additive model .footnotesize[ Additive models are a form of linear models. A logistic additive model with a single explanatory variable can be defined as: `$$\text{logit}(P(y_i = 1)) = f(x_i)$$` where `$$f(x) = \sum_{j = 1}^k b_j(x)\beta_j$$` `\(b_j(x)\)` - `\(j^\text{th}\)` basis function of the explanatory variable `\(x\)` `\(\beta_j(x)\)` - `\(j^\text{th}\)` parameters associated to the basis function of the explanatory variable `\(x\)` ] -- This is some pretty dense theory... <img src="assets/img/grinning-face-with-one-large-and-one-small-eye_1f92a.png" width="10%" style="display: block; margin: auto;" /> .footnotesize[ Let's clarify what `\(f(x)\)` is about. The `\(f(x)\)` function is a general way to representation many different formulations of an additive model. ] --- # Generalized additive model An example of `\(f(x)\)` is a polynomial regression of degree 5, that is `$$\text{logit}(P(y_i = 1)) = f(x_i)$$` where `$$f(x) = \sum_{j = 0}^5 b_j(x)\beta_j$$` and `$$b_j(x) = x^j$$` which can be rewritten as `$$\text{logit}(P(y_i = 1)) = \beta_0 + x\beta_1 + x^2\beta_2 + x^3\beta_3 + x^4\beta_4 + x^5\beta_5$$` --- # Generalized additive model Visually this can be represented as <img src="index_files/figure-html/unnamed-chunk-7-1.png" style="display: block; margin: auto;" /> --- # Generalized additive model The polynomial base function is one of many base function used in generalized additive models, sadly it is actually not very good. Ones more commonly used is the cubic regression splines <img src="assets/img/cubic_spline.png" width="100%" style="display: block; margin: auto;" /> --- # Generalized additive model ## Cubic regression spline ### Advantage - More flexible than a polynomial regression ### Disadvantage - Deciding how many knots to place is subjective - Deciding where to place the knots is subjective - It can only be applied to a single variable --- # Generalized additive model On our data a cubic regression spline results in <img src="index_files/figure-html/unnamed-chunk-9-1.png" style="display: block; margin: auto;" /> --- # Generalized additive model ## Thin plate regression spline The thin plate regression spline can be understood by thinking of an elastic mosquito mesh... What can we do with an elastic mosquito mesh to fit to an object? --- # Generalized additive model ## Thin plate regression spline The thin plate regression spline can be understood by thinking of an elastic mosquito mesh... What can we do with an elastic mosquito mesh to fit to an object? - Translate - Rotate - Stretch (scale, compression, dilation) - Shear --- # Generalized additive model ## Thin plate regression spline ### Advantage - More flexible than a polynomial regression - No knots are needed - Can be applied to multiple variables at once - Computationally efficient... ish ### Disadvantage - Interpreting the meaning of each thin plate spline is challenging --- # Generalized additive model On our data a thin plate regression spline results in <img src="index_files/figure-html/unnamed-chunk-10-1.png" style="display: block; margin: auto;" /> --- class: inverse, center, middle # Model diagnostics <html><div style='float:left'></div><hr color='#EB811B' size=1px width=720px></html> --- # Model diagnostics Model diagnostics are tests or statistics used to verify assumptions of a model. In regression analysis model diagnostics to check for: - Homogeneity of variance - Normality - Presence of outliers - ... In this course we will be mainly concern about **dependence error**. .full-width[.content-box-green[**Dependence error** Samples are not independent among each other.]] --- # Checking for spatial dependence .footnotesize[ The *Moran's `\(I\)` statistics* can be defined as follow: `$$I=\frac{m\sum_{i=1}^m\sum_{j=1}^mw_{ij}\left(Z_i - \overline{Z}\right)\left(Z_j - \overline{Z}\right)}{\left(\sum_{i=1}^m\sum_{j=1}^mw_{ij}\right)\left(\sum_{i=1}^m\left(Z_i - \overline{Z}\right)^2\right)}$$` where - `\(Z_i\)` is the spatial data (it could also be the residuals) - `\(\overline Z\)` is the mean of the data (or of the residuals) across the full sampling area - `\(w_{ij}\)` is the strength of the relation between samples `\(i\)` and `\(j\)` - `\(w_{ii}\)` needs to be 0 for all samples In words, Moran's `\(I\)`, is a weighted form of Pearson's correlation. ] ## Properties of Moran's `\(I\)` .footnotesize[ - If `\(I\)` is positive, it suggest there is positive spatial autocorrelation - If `\(I\)` is negative, it suggest there is negative spatial autocorrelation - As `\(I\)` is close to 0, it suggest there no spatial autocorrelation - The value of `\(I\)` ranges theoretically from -1 to 1 ] --- class: inverse, center, middle # Testing these statistics <html><div style='float:left'></div><hr color='#EB811B' size=1px width=720px></html> --- # Parametric tests Parametric testing has many constraints and generally supposes that several conditions are fulfilled for the test to be valid. ## Conditions * Observations must be independent from one another. This means that autocorrelated data violate this principle, their error terms being correlated across observations * The data most follow some well-known theoretical distribution, most often the normal distribution. --- # Parametric tests When the conditions of a given test are fulfilled, an auxiliary variable constructed from one or several parameters estimated from the data (for instance an `\(F\)` or `\(t\)`-statistic) has a known behaviour under the null hypothesis ( `\(H_0\)` ). It thus becomes possible to ascertain whether the observed value of that statistic is likely or not to occur if `\(H_0\)` should be rejected or not. If the observed value is as extreme or more extreme than the value of the reference statistic for a pre-established probability level (usually `\(\alpha\)` = 0.05), then `\(H_0\)` is rejected. If not, `\(H_0\)` is not rejected. --- # Parametric tests ## Decision in statistical testing ### Two-tailed test <!-- --> --- # Parametric tests ## Decision in statistical testing ### One-tailed test (right) <!-- --> --- # Parametric tests ## Decision in statistical testing ### One-tailed test (left) <!-- --> --- # Permutation test Parametric tests are rarely usable with ecological data, mainly because ecological data rarely follow the normal distribution, on which many statistical techniques are based on. Even data transformations often do not manage to normalize the data. In these conditions, another, very elegant but computationally more intensive approach is available: **testing by random permutations**. --- # Principal of permutation testing 1. If no theoretical reference distribution is available, then generate a reference distribution under `\(H_0\)` from the data themselves. This is achieved by permuting the data randomly in a scheme that ensures `\(H_0\)` to be true, and recomputing the test statistic. 2. Repeat the procedure a large number of times. 3. Compare the statistic calculated with the observed data with the set of test statistics obtained by permutations. If the observed value is as extreme or more extreme than, say, the 5% most extreme values obtained under permutations, then it is considered too extreme for `\(H_0\)` to be true and thus `\(H_0\)` is rejected. --- # Principal of permutation testing ## Example using the Pearson's correlation ( `\(r\)` ) .small[ |Var. 1|Var. 2|Perm. 1|Perm. 2|Perm. 3| |:-:|:-:|:-:|:-:|:-:| | 1 | 4 | 4 | 10 | 11 | | 3 | 5 | 5 | 8 | 8 | | 2 | 3 | 8 | 4 | 14 | | 4 | 6 | 10 | 6 | 5 | | 3 | 8 | 11 | 3 | 3 | | 6 | 7 | 9 | 7 | 10 | | 7 | 9 | 3 | 5 | 6 | | 5 | 10 | 14 | 14 | 9 | | 8 | 11 | 7 | 9 | 7 | | 9 | 14 | 6 | 11 | 4 | | **Pearson's `\(r\)`** | **0.890** | -0.081 | 0.288 | -0474 | ] --- # Principal of permutation testing ## Interpretation of a permutation test Since the columns "Perm.1", "Perm.2", "Perm.3" present randomly permuted values of "Var. 2", the relationship between "Var.1" and "Perm.1" should not present any relationship. As such, the permuted iterations of "Var.2" present realisations of `\(H_0\)`, the null hypothesis of the test: there is no linear relationship between the two variables. If we construct many (e.g. 99, 999 or 9999) random permutations of "Var.2", we can build a distribution from which to compare the observed statistics (calculated on non-permuted data). --- # Principal of permutation testing ## Interpretation of a permutation test <!-- --> --- # Principal of permutation testing ## Calculation permutation-based statistic ### Two-tailed test `\(H_0\)` is rejected at `\(\alpha = 0.05\)` when `$$P = \dfrac{\sum(\text{perm}\leq -|\text{observation}|)+\sum(\text{perm} \ge |\text{observation}|)}{n_\text{perm}+1}$$` ### one-tailed (right) test `\(H_0\)` is rejected at `\(\alpha = 0.05\)` when `$$P = \dfrac{\sum(\text{perm} \ge |\text{observation}|)}{n_\text{perm}+1}$$` --- # Principal of permutation testing ## .footnotesize[Continuing the example of the Pearson's correlation] `\((r)\)` ### Illustration of two-tailed test results <img src="index_files/figure-html/unnamed-chunk-15-1.png" style="display: block; margin: auto;" /> --- # Principal of permutation testing ## .footnotesize[Continuing the example of the Pearson's correlation] `\((r)\)` ### Illustration of one-tailed test, right tail results <img src="index_files/figure-html/unnamed-chunk-16-1.png" style="display: block; margin: auto;" /> --- # Principal of permutation testing ## .footnotesize[Continuing the example of the Pearson's correlation] `\((r)\)` ### Illustration of one-tailed test, left tail results <img src="index_files/figure-html/unnamed-chunk-17-1.png" style="display: block; margin: auto;" /> --- # Permutation testing ## Word of caution Permutation tests are elegant, but they do not solve all problems related to statistical testing. .small[ - Beyond simple cases like the one use in this lecture, other problems may require different and more complicated permutation schemes than the simple random scheme applied here. - For example, the tests of the main factors of an ANOVA, where the permutations for one factor must be limited within the levels of factor B, and vice versa, need special permutation procedure. - Permutation tests do solve several, but not all, distributional problems. In particular, **they do not solve distributional problems linked to the hypothesis being tested**. - For example, permutational ANOVA does not require normality, but it still does require homogeneity of variances. ] --- # Permutation testing ## Word of caution .small[ - Permutation tests by themselves **do not** solve the problem of independence of observations. This problem has still to be addressed by special solutions, differing from case to case, and often related to the correction of degrees of freedom. - This is different from our goal where the statistics is used to tests for independence of observations. - Although many statistics can be tested directly by permutations (e.g. Pearson's `\(r\)` as above), it is generally advised to use a pivotal statistic whenever possible (for the `\(r\)` it would be a Student `\(t\)` statistic). A pivotal statistic has a distribution under the null hypothesis which remains the same for any value of the measured effect. - **It is not the statistic itself which determines if a test is parametric or not**, it is the reference to a theoretical distribution (which requires assumptions about the parameters of the statistical population from which the data have been extracted) or to permutations. ] --- # Using these different tests In a regression model such as the one discussed so far, we assume that the samples are independent. Said differently, the **model residuals** should not present any spatial or temporal or spatiotemporal correlations. As such, these tests are usually used to check for structure in a model's residuals. Say our test shows that there is autocorrelation in the model's residuals, what can we do about it ? .Large[.red[Build a more complex model!]] Proposing solutions to this problem is what this course is about! --- class: clear, middle # Study case: Species distribution models ## <i class="fas fa-tag "></i> with GLM: https://steveviss.github.io/PR-GSFE01/trend_surface/practiceGLM.html ## <i class="fas fa-tag "></i> with GAM: https://steveviss.github.io/PR-GSFE01/trend_surface/practiceGAM.html